image via New York School Talk

In late September of this year, AP News included a report from the state of Massachusetts showing declines on state standardized tests in both ELA and math performance. The report compared student performance on the MCAS (state test) from 2019 to the 2021 results and showed significant losses, particularly in elementary math.

This shouldn’t come as a surprise; other major disruptions like Hurricane Katrina have provided educators with a reliable road map of the fallout that occurs when education is suspended, even for short stretches. What’s interesting is what happened just before this information was released. The day prior, Massachusetts lawmakers held a hearing on a bill that would eliminate the state tests (MCAS) as a graduation requirement. The bill at issue would replace the standardized test with “a broader and democratically determined framework to measure school quality, along with more authentic forms of demonstrating student achievement” and create a grant program to help district stakeholders set goals for their schools and identify resources to help them meet those goals.

What I like about it is that it recognizes a key problem, the validity of state tests, and attempts to ameliorate it. What I like even more is that it’s trying something ambitious, something outside the box education has prescribed for itself since NCLB was enacted in the early 2000s. It’s trying something CMSi has recommended for years: Authentic Assessment.

The root problem here is whether a multiple choice instrument of any kind can really capture what a child has learned. If we want a child to be able to write an essay, what’s a more effective measure of whether they’ve mastered that ability: multiple choice questions about writing, thesis statements, supporting detail, and editing, or an essay they’ve written using structures and conventions they’ve learned? Does it seem faintly ridiculous that we would measure writing ability with multiple choice questions? I think so, but we see this all the time on standardized tests. Remember, for the testing companies, it’s easy to score a multiple choice test with fidelity and much harder (and more expensive) to score essays with the same level of consistency.

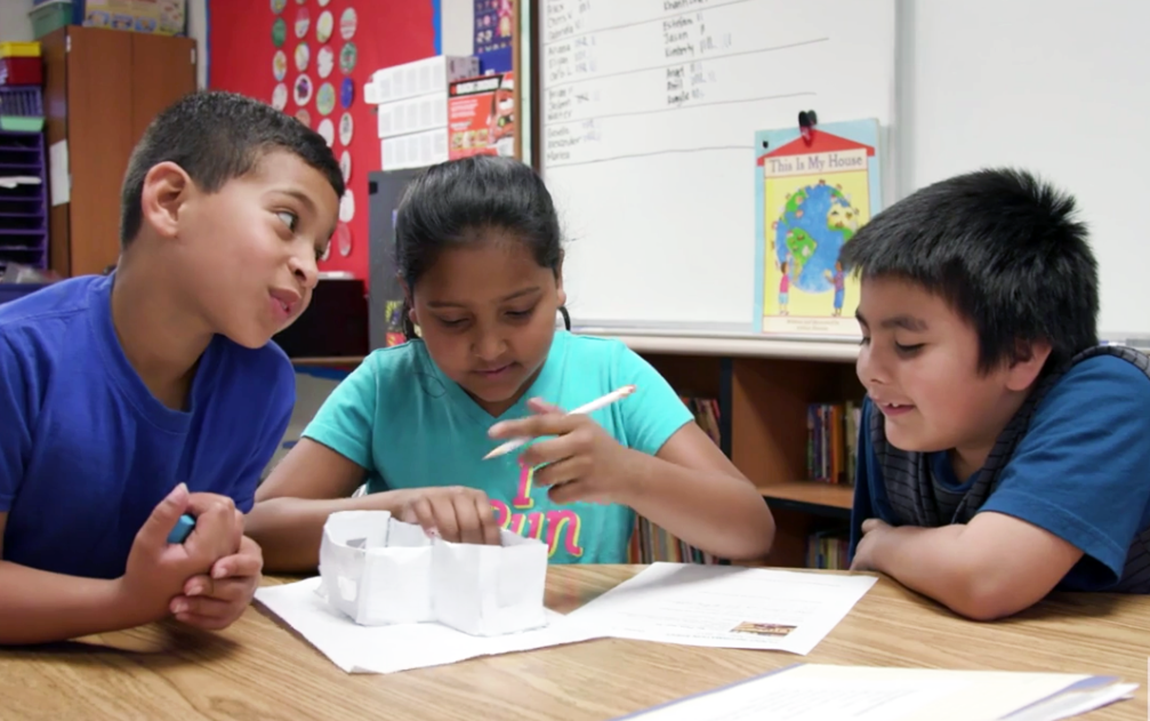

Let’s go even further. Rather than just writing an essay, what if students wrote essays about things that related to them or issues they cared about? And what if — as long as we’re dreaming — the actual assignment involved collecting real world data that they had to interpret and use to support a position or conclusion? Here’s an example from a real classroom that demonstrates what this could look like:

- A middle school Science class is learning about how to use time and distance to calculate speed. They are tasked with determining whether people are speeding through the school zone in front of their building. They spend several days figuring out how to measure speed by finding distances between points and coming up with a way to measure the time it takes cars to traverse the points, figuring out where those points should be in front of their school, measuring distances, timing passing cars, and collecting data on different days of the week at different times of day. They must then write a formal letter to the Principal outlining their results and recommending whether the school should invest in a speed camera or not, explaining how they measured everything and showing a visual representation of the data including the mean speed of the cars and a comparison of the different days and times when data were collected. They must use formal, academic language in their letter.
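
For readers who want to see the arithmetic behind the students' letter, here is a minimal sketch of the calculation: speed is distance divided by time, averaged and compared across days and times. Everything specific in it is an assumption made for illustration, not data from the classroom: the 50 meter distance, the 30 km/h limit, the metric units, the sample timings, and the function names `speed_kmh` and `summarize`.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical values for illustration only; none come from the real classroom.
DISTANCE_M = 50.0        # assumed distance between the two marked points, in meters
SPEED_LIMIT_KMH = 30.0   # assumed school-zone speed limit

# (day, time of day, seconds a car took to travel between the two points)
observations = [
    ("Mon", "morning", 5.0),
    ("Mon", "morning", 7.5),
    ("Mon", "afternoon", 4.0),
    ("Wed", "morning", 8.0),
    ("Wed", "afternoon", 4.5),
]


def speed_kmh(distance_m, seconds):
    """Speed = distance / time, converted from meters per second to km/h."""
    return distance_m / seconds * 3.6


def summarize(observations, distance_m, limit_kmh):
    """Group speeds by (day, time of day) and report the mean and count over the limit."""
    groups = defaultdict(list)
    for day, period, seconds in observations:
        groups[(day, period)].append(speed_kmh(distance_m, seconds))
    return {
        key: {
            "cars": len(speeds),
            "mean_kmh": mean(speeds),
            "over_limit": sum(s > limit_kmh for s in speeds),
        }
        for key, speeds in groups.items()
    }


if __name__ == "__main__":
    for (day, period), stats in summarize(
        observations, DISTANCE_M, SPEED_LIMIT_KMH
    ).items():
        print(day, period, stats)
```

A class would more likely do this with a spreadsheet or by hand, but the steps are the same: convert each timing into a speed, group by day and time, then compare the group means against the limit.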

image via PBL Works

There’s quite a lot about this example that is radically different from most standardized tests. For a start, it integrates three separate content areas and can serve as an assessment of a science standard, a math standard, and a host of writing standards. It’s collaborative rather than individual, requiring the skills and assistance of several students to conduct the experiment and report the results. It is highly engaging because it’s a real world situation that applies directly to the students and their immediate world, and it’s hands-on rather than abstract or theoretical, requiring measuring, timing, and the placing of points. It’s also cognitively rigorous, requiring students to collect, analyze, and represent the data to support a position (speed camera or no speed camera?) and synthesize that into a whole new product, the formal letter. It’s also time intensive, requiring many days to complete as opposed to a single timed sitting. It requires more intensive teacher support and monitoring than the light monitoring of test proctoring, and it requires special tools and materials (stopwatches, cones, software, etc.) rather than just a bubble sheet and a number 2 pencil.

Is it logistically more difficult than a multiple choice test? Sure. But kids have a far greater chance of remembering this activity/assessment. Its concepts are studied in depth over many days, while the bubble test is a 35-minute timed survey of things they remember (or don’t). In many ways, the more authentic assessment closely resembles how things work in businesses: collaboration, assigning people to specific tasks, combining findings into a single presentation or report, investigations or projects conducted over an extended time frame, and conclusions supported by data analysis and interpretation.

It’s not entirely without its challenges. Teachers will have to structure activities carefully and monitor them closely to make sure no one child does the bulk of the work and that no child is left out of the project process because of perceived inadequacies. There will have to be careful attention to grading, with some portions of the project graded individually and others graded for the group as a whole. This has been done successfully in math and other content areas; it just requires a little extra thought on the part of the teacher. At the end, the teacher will have a much more precise, nuanced grasp of what concepts students have mastered. And the students will have experience with integrated problems where content areas bleed into each other instead of staying in neat, siloed boxes.

You know — like life.